NORWAY

Current Trends and Challenges in the Legal Framework

Samson Y. Esayas and Mathias K. Hauglid

Abstract

This chapter explores Norway's public digitalisation efforts, assessing the effectiveness of legislative and policy measures in advancing the public sector's digitalisation and examining the adequacy of safeguards for fundamental rights. Norway stands out for its highly digitalised public sector, a result of strategic legislative and policy initiatives promoting a digital-friendly environment. We pinpoint three key areas of focus in these endeavours.

First, there have been numerous legislative initiatives enabling profiling and automated decision-making in public agencies. While driven by efficiency objectives, these initiatives tend to be seen as tools to promote equal treatment. Second, changes have been made to counter challenges in data reuse hindering digital transformation and Artificial Intelligence (AI) implementation. Third, the advocacy for regulatory sandboxes emerges as a powerful force for experimentation and learning, with platforms like the Sandbox for Responsible AI setting examples.

Despite the progress, challenges persist. Firstly, most initiatives focus on enabling decisions via hard-coded software, often neglecting advanced AI systems designed for decision support. Secondly, discretionary criteria in public administration law and semantic discrepancies across sector-specific regulations continue to be a stumbling block for automation and streamlined service delivery. Importantly, few laws directly tackle the challenges digitalisation presents to fundamental democratic values and rights, due to a fragmented, sector-focused approach.

Furthermore, we assess the AI Act's potential to facilitate AI implementation while redressing national law gaps concerning human rights and boosting AI use in public agencies. The Act places public administration under sharp scrutiny, as the bulk of the prohibitions and high-risk AI applications target the public sector’s use of AI. This focus promises to enhance the protection of individuals in this domain, especially concerning transparency, privacy, data protection, and anti-discrimination. Yet, we identify a potential conflict between the AI Act and a tendency in the Norwegian legal framework to restrict the use of AI for certain purposes.

Finally, we put forth recommendations to boost digitalisation while safeguarding human rights. Legislative actions should pave the way for the integration of advanced AI systems intended for decision support. There is a need for coordination of sector-specific initiatives and assessment of their impact on fundamental rights. To amplify these national endeavours, we point out areas where cross-border collaborations in the Nordic-Baltic regions could be vital, emphasizing data sharing, and learning from successful projects. Regulatory sandboxes offer another promising avenue for collaboration. With its considerable experience in sandboxes tailored for responsible AI, Norway stands as a beacon for other nations in the Nordic and Baltic regions.

1. Overview of Public Sector and Digitalisation Projects

Norway stands as one of the countries with a highly digitalised public sector, ranked no. 5 in the European Commission’s 2022 Digital Economy and Society Index. While this section broadly covers Norway's efforts in public sector digitalisation, it places particular emphasis on the implementation of AI technologies. This aspect of digitalisation is arguably the most significant transformation currently occurring in how public sector services and decisions are conducted, with profound implications for safeguarding fundamental rights and upholding the values of the Norwegian democracy.

1.1 Organization of the Public Sector

Norway is a constitutional democracy.

Konstitusjonelt demokrati. / Smith, Eivind. 5th ed. 2021, p. 30.

The Norwegian Constitution, Article 3.

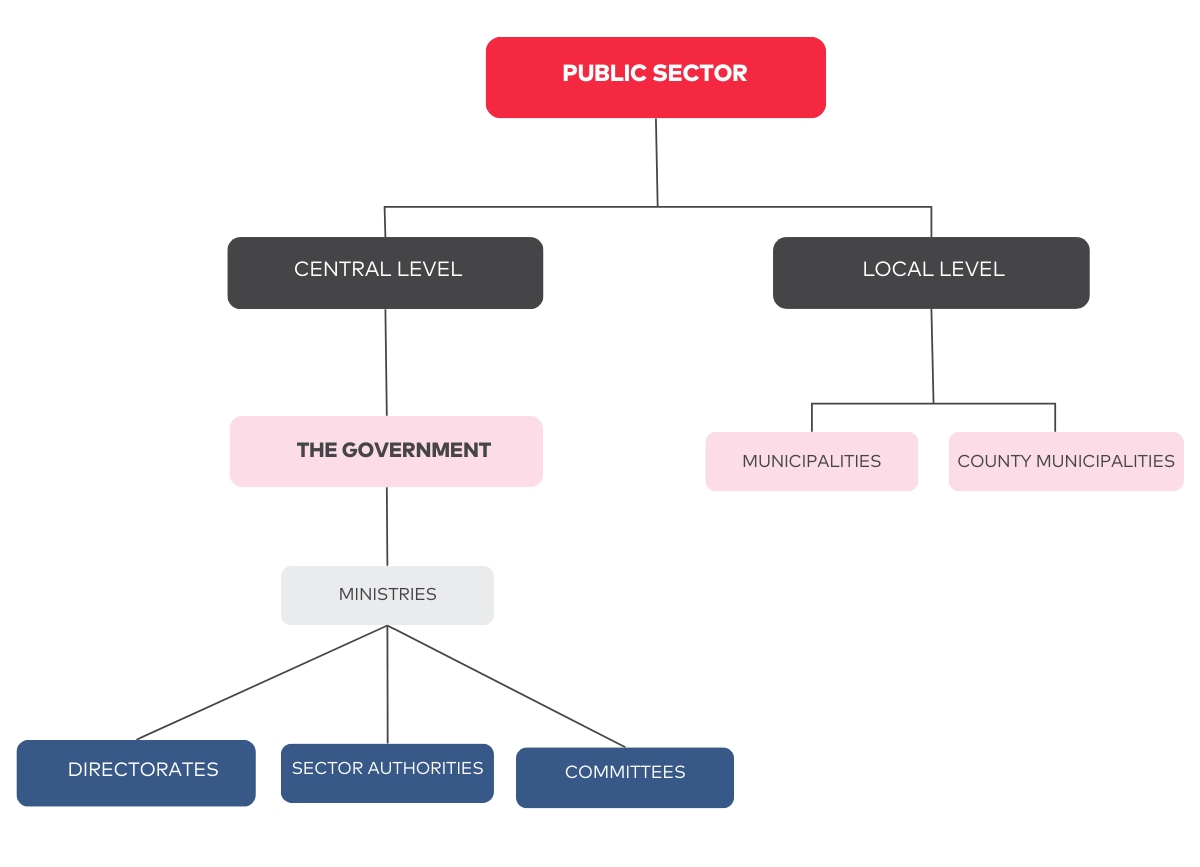

The central administration consists of the government, ministries, and directorates, which govern units at the regional and local levels. The division of the central administration into various administrative bodies is primarily based on policy areas or tasks, not on geographical criteria. Various supervisory authorities and other sector-specific authorities are typically organized under the respective ministries. In addition, there are some collegial bodies (committees) with specific and limited functions, such as acting as an appellate body or advisory body on certain matters. A higher-level body can normally instruct subordinate bodies in the organizational hierarchy, both generally and in individual cases. As a main rule, however, the central administration bodies cannot instruct the local administration (municipalities and county municipalities).

Figure 1. Organization of the Norwegian public sector.

1.2 Implemented and Planned Projects

1.2.1 Overview

Norway is at the forefront of digitalising its public services, with a dedicated Directorate for Digitalisation (Digdir) driving the initiatives in the public sector. While there is a vast array of digitalisation projects within the public sector, certain projects have garnered widespread attention. Since 2019, Digdir has recognized and awarded projects that showcase the potential of digitalisation. To receive the award, projects must be ‘innovative and contribute to a better and more efficient public sector - and to an easier everyday life for citizens’.

Her er årets tre beste offentlige innovasjoner. / Directorate for Digitalisation (Digdir) 30 May 2022 https://www.digdir.no/digitaliseringskonferansen/her-er-arets-tre-beste-offentlige-innovasjoner/3615. All links are last accessed 05 October 2023.

Moreover, the Norwegian Artificial Intelligence Research Consortium (NORA) and Digdir established a comprehensive database that provides an overview of both ongoing and completed AI projects in the public sector.

Kunstig intelligens – oversikt over prosjekter i offentlig sektor. / Felles datakatalog, Directorate for Digitalisation (https://data.norge.no/kunstig-intelligens).

The creation of this database is a good first step towards promoting transparency and accountability in the public sector's use of AI. It contributes to a transparent public sector, giving citizens and other stakeholders insight into how AI is used. Additionally, the database plays a crucial role in reducing redundant efforts and facilitating the exchange of best practices on how to use AI. This not only ensures the efficient use of resources but also contributes to the responsible use of AI in the public sector. In the following, we highlight some projects that have gained attention and are also relevant from a regulatory perspective. Before proceeding further, it is apt to highlight the specific areas where AI is being employed by public agencies.

In a 2022 survey conducted by Vestlandsforsking and commissioned by the Directorate for Children, Youth, and Family, various applications of AI within public agencies were examined.

Bruk av Kunstig Intelligens i Offentlig Sektor og Risiko for Diskriminering. / VF-Rapport nr. 7-2022. Vestlandsforsking, 2022, p. 30–31 (hereinafter VF-Rapport nr. 7-2022).

- Data Quality Enhancement: The first area focuses on using rule-based AI to augment the integrity of datasets. Rather than processing the data, AI algorithms are employed to identify and rectify errors within datasets, which may contain personal information.

- Error Detection and User Experience: AI is also deployed to uncover gaps or inaccuracies in systems, aiming to enhance user interaction with various services. By providing predictive recommendations, AI helps users avoid making mistakes. These projects typically use highly explainable models, and the datasets may contain individually identifiable information recast as event descriptions.

- Organizational Needs Prediction: AI assists in forecasting internal needs within an organization, such as predicting employee absence rates. The ultimate goal is system optimisation. Explainable models are the technology of choice here, working with data that may include individual records.

- Fraud and Misuse Detection: Some projects employ 'black-box' AI models to reveal suspicious patterns within systems. The primary objective is to flag misuse, and the data involved may encompass personal and contact details.

- User Behavior Prediction in Welfare Services: AI is utilised to anticipate the behaviour of welfare service users, aiming to enhance accessibility and minimise fraudulent use. AI systems with explainable models analyze data that has been converted into event descriptions.

- Medical Treatment Applications: In healthcare settings, AI plays a role in patient treatment, such as image-based diagnostics. Machine learning algorithms analyze individual data for this purpose.

- Synthetic Test Data Analysis: One specialized project focuses on the use of machine learning for generating and analyzing synthetic test data.

- Case Handling Support: Lastly, AI systems with explainable models aid case handlers in streamlining the case management process, making decision-making more efficient and reliable.

In the following, we describe a selection of digitalisation projects, with a particular focus on AI technologies that have been implemented or are planned within the Norwegian public sector.

1.2.2 Implemented Projects

1.2.2.1 Automating decisions on citizenship applications

The Norwegian Directorate for Immigration (UDI) won the 2022 prize for best public digitalisation project for its work in automating decisions in the handling of citizenship applications.

Automatisering kutter ventetiden for å bli norsk. / Directorate for Digitalisation (Digdir) 16 August 2022 https://www.digdir.no/digitaliseringskonferansen/automatisering-kutter-ventetiden-bli-norsk/3780

1.2.2.2 Using AI for Residential Verification by the Norwegian State Educational Loan Fund

The Norwegian State Educational Loan Fund (Lånekassen) successfully utilised machine learning to select candidates for ‘residential verification’, a process to confirm the addresses of students claiming to live away from their parents' home. In 2018, out of 25,000 students verified, 15,000 were chosen through AI, while 10,000 were randomly selected. The AI method proved more effective: 11.6% of the AI-selected students failed the verification, compared to 5.5% of the randomly selected control group.

One Digital Public Sector: Digital Strategy for the Public Sector 2019–2025. Ministry of Local Government and Modernisation. 2019 (hereinafter Digital Strategy for the Public Sector 2019–2025) p. 23.
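As a rough illustration of what the Lånekassen figures imply, the following sketch works through the arithmetic. It uses only the numbers reported above; the failure counts are derived from the rounded percentages and are therefore approximate, and the variable names are illustrative rather than taken from the source.

```python
# Figures reported for the 2018 residential verification.
ai_selected = 15_000        # students chosen by the machine learning model
random_selected = 10_000    # students chosen at random (control group)
ai_fail_rate = 0.116        # share of AI-selected students failing verification
random_fail_rate = 0.055    # share of randomly selected students failing

# Implied number of failed verifications in each group.
# Assumption: derived from rounded percentages, so only approximate.
ai_failures = round(ai_selected * ai_fail_rate)
random_failures = round(random_selected * random_fail_rate)

# How many times more often the AI-selected group failed verification.
relative_lift = ai_fail_rate / random_fail_rate

print(f"Implied failures (AI-selected): {ai_failures}")
print(f"Implied failures (random):      {random_failures}")
print(f"Relative lift:                  {relative_lift:.2f}x")
```

On these figures, a verification directed by the model was roughly twice as likely to uncover an incorrect address as a randomly directed one. The comparison says nothing about why the model selected particular students, which is the question the fairness and transparency principles discussed later in the chapter address.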

1.2.2.3 Vestre Viken Health Region’s Use of AI Medical Image Analysis

Medical image analysis is one of the tasks at which AI systems are currently performing well. Internationally, radiology stands out as an area within medicine where AI systems are most frequently implemented. One of the first implementations of an AI system for diagnosis based on image analysis in Norway took place in 2023 when a hospital in the Vestre Viken health region started using an AI system for the analysis of images from patients suspected of suffering from minor bone fractures. The main benefit of implementing the AI system is time and resource efficiency: the time from taking an image to receiving the result is said to decrease from hours to 1–2 minutes.

Er vi forberedt på å la maskinene behandle oss? / Topdahl, Rolv Christian, Mullis, Magnus Ekeli, and Nøkling, Anders. NRK, 25 September 2023 https://www.nrk.no/rogaland/xl/snart-vil-kunstig-intelligens-analysere-kroppen-din_-_-vi-er-for-darlig-forberedt-1.16553955

1.2.3 Planned Projects

1.2.3.1 The NAV AI Sandbox Project to Predict Duration of Sickness Absence

In Spring 2021, NAV (the Norwegian Labour and Welfare Administration) collaborated with the Data Protection Authority's AI sandbox initiative.

Exit Report from Sandbox Project with NAV Themes: Legal Basis, Fairness and Explainability. / Datatilsynet. 03 January 2022 https://www.datatilsynet.no/en/regulations-and-tools/sandbox-for-artificial-intelligence/reports/nav---exit-report/

Exit Report from Sandbox Project with NAV Themes: Legal Basis, Fairness and Explainability. / Datatilsynet. 03 January 2022 https://www.datatilsynet.no/en/regulations-and-tools/sandbox-for-artificial-intelligence/reports/nav---exit-report/ p. 4.

The objective of this sandbox project was to assess the legality of using AI in such a context and to explore how profiling of persons on sick leave can be performed in a fair and transparent manner.

Exit Report from Sandbox Project with NAV Themes: Legal Basis, Fairness and Explainability. / Datatilsynet. 03 January 2022 https://www.datatilsynet.no/en/regulations-and-tools/sandbox-for-artificial-intelligence/reports/nav---exit-report/ p. 3.

Ditt personvern – vårt felles ansvar Tid for en personvernpolitikk. / Norges offentlige utredninger (NOU) 2022: 11, Rapport fra Personvernkommisjonen, 26 September 2022 (hereinafter NOU 2022:11), p. 67.

1.2.3.2 Digitalising the right to access

The project aims to create a platform that gives citizens an overview, insight and increased control over their own personal data. This initiative is a crucial component of the government's Digital Agenda, specifically focusing on the ‘Once-Only Principle’, which aims to facilitate the delivery of seamless, proactive services while also promoting data-driven innovation and a user-centric experience.

Digital Strategy for the Public Sector 2019–2025, p. 28.

The first pivotal element is the creation of the National Data Directory, which serves as a foundational step toward achieving the ‘Once-Only Principle.’

Digital Strategy for the Public Sector 2019–2025, p. 21.

The second focus area involves centralizing both guidance for public agencies and the option for citizens to request information access, all in a single platform. This approach puts the citizen at the forefront of public data management. Additionally, there is a proposal to standardize how public entities should respond to access requests, thereby creating a uniform experience for citizens. The third focus area specifically deals with citizens' ability to access and share their own personal information. The aim here is to amplify data sharing by granting citizens the ability to use their own data for various purposes. One proposed strategy is to delineate a set of core data elements—such as driver's licenses, academic diplomas, or income records—over which citizens can have varying degrees of control.

1.2.3.3 Several ongoing AI initiatives in the healthcare sector

While the Norwegian healthcare sector is often criticized for lagging in terms of digitalisation, several innovative projects pertaining to AI technologies are currently in motion. One such initiative is underway at Akershus University Hospital (Ahus), Norway's largest emergency hospital. Ahus is planning to develop an algorithm that predicts heart failure risks, utilizing factors such as ECG measurements as its foundation. This tool, designed for clinical settings, aims to enhance patient care by facilitating timely assessments and treatments, particularly for those exhibiting higher heart failure probabilities.

Moreover, at the University Hospital of North Norway (UNN), a project is underway to develop an AI system intended to support decisions on whether a patient should have spine surgery.

In the interest of disclosure, it is noted that one of the authors of this chapter (Hauglid) has been involved in one of the ‘work packages’ pertaining to initial stages of the spine surgery project.

In another noteworthy endeavour by the Bergen Municipality, there is a focus on forecasting stroke risks using data from emergency calls and preceding hospital contacts. This project is structured in three distinct phases. Initially, a comprehensive survey will analyze the healthcare interactions stroke patients in Helse Bergen have had prior to their admission and subsequent entry into the Stroke Register. Following this, the second phase emphasizes the development of an AI model. This model will be informed by an intricate analysis of emergency (‘113’) call data and structured datasets from the Norwegian patient register. Once developed, the final phase involves integrating the AI model at the Emergency Department at Haukeland University Hospital Bergen to determine if the AI's inclusion boosts the accuracy of stroke diagnoses. The goal transcends stroke predictions, with aspirations to implement AI assistance in diagnosing other acute medical conditions, including heart attacks and sepsis.

1.2.3.4 Government commits one billion NOK to bolster AI research

On September 7th, 2023, the government pledged one billion Norwegian kroner (approximately 94 million USD) to strengthen research in AI and digital technology over the coming five years. The funding is directed at the following research areas:

Regjeringen med milliardsatsing på kunstig intelligens. Regjeringen, Pressemelding 07 September 2023 https://www.regjeringen.no/no/aktuelt/regjeringen-med-milliardsatsing-pa-kunstig-intelligens/id2993214/

- Delving into the societal repercussions of AI and various digital technologies, with a spotlight on their influence on democracy, trust, ethics, economy, rule of law, regulations, data protection, education, arts, and culture.

- Undertaking research centred on digital technologies, which encompasses fields like artificial intelligence, digital security, next-generation ICT, novel sensor technologies, and quantum computing.

- Exploring the potential of digital technologies to foster innovation in both public and private spheres. This also includes studying the ways AI can be intertwined with research spanning diverse academic disciplines.

2. Overview of the Legal Framework in Supporting Digitalisation, Values and Rights

2.1 Relevant Legal Framework for the Protection of Human Rights

2.1.1 Human Rights and the Norwegian Constitution

Since the very adoption of the Norwegian Constitution in 1814, certain foundational principles resembling a modern understanding of human rights have found their place therein as citizen rights. These include the right to freedom of expression, the right to property, a prohibition of torture and a prohibition against arbitrary house searches.

Norway ratified the European Convention on Human Rights (ECHR) in 1952 and incorporated the convention directly into Norwegian law in 1999, through the Norwegian Human Rights Act – a significant milestone in strengthening the status of human rights in Norwegian law. The Human Rights Act also incorporates the following UN conventions into Norwegian law: The Covenant on Economic, Social and Cultural Rights (CESCR), the Covenant on Civil and Political Rights (CCPR), the Convention on the Rights of the Child (CRC), and the Convention on the Elimination of All Forms of Discrimination against Women (CEDAW). Not only do these human rights instruments form an integral part of Norwegian law, they also take precedence over other provisions of Norwegian legislation in case of conflict. Moreover, Norway has ratified several other UN human rights conventions, such as the Convention on the Elimination of All Forms of Racial Discrimination (CERD) and the Convention on the Rights of Persons with Disabilities (CRPD).

The status of human rights in Norwegian law was further strengthened by a reform of the Constitution in 2014. The reform elevated several human rights to explicit recognition at the constitutional level. A new chapter in the Constitution now amounts to what can be likened to a ‘bill of rights’ for Norway.

Norges Høyesterett, Grunnloven og menneskerettighetene. / Bårdsen, Arnfinn. In: Menneskerettighetene og Norge. ed. / Andreas Føllesdal, Morten Ruud and Geir Ulfstein. Vol. 1, 1. ed. Universitetsforlaget, 2017, p. 65.

While the human rights that are now enshrined in the Constitution were recognized in Norwegian law long before the constitutional reform, the elevation to constitutional status signifies that these human rights are among the foundational values of the Norwegian constitutional democracy. To further underscore the status of human rights in Norway, the 2014 constitutional reform also introduced in the Constitution a general obligation for all authorities of the state to respect and ensure human rights.

Article 92 of the Norwegian Constitution.

Due to the status of human rights in Norwegian law, the jurisprudence of the European Court of Human Rights (ECtHR) is a significant source of interpretation when applying Norwegian law, including the constitutional human rights provisions.

Judgment of the Norwegian Supreme Court, 18.12.2014 (Rt. 2014 p. 1292), paragraph 14.

Judgment of the Norwegian Supreme Court, 19.12.2008 (Rt. 2008 p. 1764).

2.1.2 Norwegian Public Administration Law

The Norwegian public administration is governed by the 1967 Public Administration Act (PAA). The PAA lays down procedural rules that generally apply to administrative agencies and officials across all sectors. It operationalizes the foundational principles of Norwegian public administration law, such as freedom of information, the right to participation and contestation, the rule of law and legal safeguards for the individual citizen, neutrality, and proportionality.

Alminnelig forvaltningsrett. / Graver, Hans Petter. 4 ed.: Universitetsforlaget, 2015, chapters 4–8.

For example, the PAA sets forth the requirements as to a public official’s impartiality, the duty of confidentiality, information rights for parties involved in administrative cases, and the requirements pertaining to the preparation and provision of the grounds for an administrative decision that affects individual citizens. The PAA is supplemented by the 2006 Freedom of Information Act (FIA), which provides that the case documents, journals and registries of an administrative agency shall, as a main rule, be available to the public free of charge.

Article 3 FIA, cf. Article 8 FIA.

Article 9 FIA, cf. Article 28 FIA.

In addition to the PAA and the FIA, Norwegian public administration is regulated in more detail by sector-specific statutes. Over the years, the PAA and the sector-specific statutes have been amended several times, including piecemeal adaptations to accommodate the increased importance of digital technologies in the Norwegian public sector. An extensive effort was made in 2000 to amend regulations that prevented electronic communication between citizens and administrative agencies (the eRegulation project).

Article 15 a PAA.

Digital Strategy for the Public Sector 2019–2025, p. 11.

Norges offentlige utredninger (NOU) 2019: 5 Ny forvaltningslov (hereinafter NOU 2019: 5), p. 259.

Moreover, a proposal for a comprehensive reform of the PAA is currently being processed at a political level. Not surprisingly, the proposal addresses the need to facilitate digitalisation. The proposal is further discussed in section 3.3, where we identify certain trends in the legislative reforms related to the digitalisation of the Norwegian public sector and examine how this continuously evolving landscape promotes core principles and values of the Norwegian democracy while facilitating digitalisation.

2.2 Core Principles and Values Guiding Public Sector Digitalisation in Norway

Core principles and values for digitalisation in the Norwegian public sector are outlined in the 2019–2025 National Strategy for Digitalisation of the Public Sector. This strategy document is titled “One Digital Public Sector” and alludes to the overarching objective of ensuring integrated, seamless and user-centric public services based on real-life events and a ‘once-only’ principle. The goal is for users – citizens, and public and private enterprises – to perceive their interaction with the public sector as seamless and efficient, as ‘one digital public sector’.

Digital Strategy for the Public Sector 2019–2025, p. 13; Stortingsmelding nr. 27 (2015–2016) Digital agenda for Norge.

Norway’s current strategy for AI, announced in 2020, also emphasises the potential for enhancement of public services through digitalisation. It particularly depicts the implementation of AI technologies as a crucial element of future digitalisation efforts in the public sector. As regards the guiding principles and values for AI development and deployment, the strategy underscores, above all, that AI developed and used in Norway should adhere to ethical principles and respect human rights and democracy. The strategy relies heavily on the Guidelines for Trustworthy AI developed by the EU High-Level Expert Group on AI. These guidelines set out key ethical principles on which there is considerable consensus in the contemporary discourse around AI technologies.

The Global Landscape of AI Ethics Guidelines. / Anna Jobin, Marcello Ienca and Effy Vayena. In: Nature Machine Intelligence, No. 1, September 2019, p. 389–399; A Framework for Language Technologies in Behavioral Research and Clinical Applications: Ethical challenges, Implications and Solutions. / Catherine Diaz-Asper et al. In: American Psychologist, 2023 (the article is forthcoming and will be available, upon publication, via DOI: 10.1037/amp0001195).

Råd for ansvarlig utvikling og bruk av kunstig intelligens i offentlig sektor. / Directorate for Digitalisation, https://www.digdir.no/kunstig-intelligens/rad-ansvarlig-utvikling-og-bruk-av-kunstig-intelligens-i-offentlig-sektor/4272.

On the basis of the aforementioned documents, digitalisation and implementation of AI technologies in the Norwegian public sector is guided by the following core principles and values (the list is non-exhaustive):

- Privacy and data protection: Privacy and data protection are the most prominent concerns in policy documents relating to the digitalisation of the Norwegian public sector, including the National AI Strategy. There is a high level of awareness of the privacy and data protection risks associated with data sharing between public agencies and the use of data for AI training purposes.

- Human agency and oversight: The National AI Strategy emphasises that AI development should enhance rather than diminish human agency and self-determination.

- Technical robustness and safety: The concepts of robustness and safety in relation to AI and digitalisation encompass various aspects, including information security, human safety, and the safe use of AI. AI systems should not harm humans. To prevent harm, AI solutions must be technically secure and robust, safeguarded against manipulation or misuse, and designed and implemented in a manner that particularly considers vulnerable groups. AI should be built on technically robust systems that mitigate risks and ensure that the systems function as intended.

- Transparency and explainability: Transparency is a central element of the rule of law and in building trust in the administration, especially when new systems like AI are being deployed. An open decision-making process allows one to assess whether the decision was fair and also allows for the possibility of lodging complaints. The National Strategy for Digitalisation of the Public Sector emphasizes that the public sector ‘shall be digitalised in a transparent, inclusive and trustworthy way’ (Digital Strategy for the Public Sector 2019–2025, p. 8).

- Non-discrimination, equality, and digital inclusion: Concerns about discrimination have become more salient in the Norwegian digitalisation discourse in recent years, as it has been recognized that AI systems might discriminate against vulnerable groups. In relation to digitalisation not involving AI systems, the objective of non-discrimination has been heralded as an argument in favour of digitalisation because automated, rule-based systems are perceived as more ‘neutral’ than human assessments. However, AI technologies may display biases that could lead to discrimination. Recognising this problem, the Norwegian Equality and Anti-Discrimination Ombud released a guidance document on ‘innebygd diskrimineringsvern’ (built-in protection against discrimination) in November 2023.

- Accountability: While accountability has arguably not been at the forefront of the Norwegian discourse on digitalisation and AI implementation, this principle is emphasised in the EU’s principles for trustworthy AI and has been enshrined in the National AI Strategy. In the Strategy, accountability is explained as an overarching requirement pertaining to the need to implement AI solutions that enable external review (National AI Strategy, p. 60).

- Environmental and societal well-being: Environmental and societal well-being is an important political and legislative principle guiding digitalisation efforts in Norway. Article 112 of the Norwegian Constitution protects the right to a healthy, productive and diverse environment. This article emphasizes the duty of the state to ensure both current and future generations' right to a healthy environment and provides citizens with a right to information concerning the state of the natural environment and the effects of planned or implemented measures. This provision has been the subject of a lively societal debate in Norway in recent years – a debate that has been driven particularly by a lawsuit from two environmental organizations unsuccessfully seeking to invalidate a governmental decision to allocate petroleum extraction licenses on the Norwegian continental shelf.

In section 3, we refer to these principles as we assess the adequacy of the current and emerging legal framework in terms of its ability to support digitalisation while upholding the governing principles and rights. Before proceeding, it is worth noting that while there is a certain level of agreement on the core principles, they inevitably cannot all be satisfied equally in every circumstance. For example, it is often recognised that the maximisation of an AI system’s accuracy might not be compatible with the maximisation of explainability.

Ethics and Governance of Artificial Intelligence for Health: WHO Guidance. / World Health Organization, Geneva, 2021.

3. Adequacy of the Legal Framework in Supporting Digitalisation, Values and Rights

3.1 Adequacy of Current (or Emerging) Framework in Supporting Digitalisation

This section explores ongoing legislative efforts in Norway to facilitate public sector digitalisation. We identify two primary categories of legislative changes driving these initiatives: those related to data sharing and reuse, and those governing the use of automated data processing and decision-making technologies. Furthermore, we examine the extent to which the Norwegian framework accommodates pilot schemes and regulatory sandboxes, which are pivotal to the adoption of new technologies.

3.1.1 Ongoing Legislative Efforts

As mentioned earlier, Norway stands as one of the countries with a highly digitalised public sector. This is partly due to concerted efforts to adapt existing legal frameworks to be more conducive to digitalisation. Electronic communication between public administration and citizens is particularly facilitated by the current legal framework. However, we expect that future legislative efforts will contain more specific regulations aimed at fostering further digital transition. Furthermore, continuous efforts are being undertaken to overcome any obstacles to public sector digitalisation.

In this section, we describe significant legislative efforts that have been made or proposed to facilitate public sector digitalisation. According to the Law Commission on the PAA, regulations should be ‘clear and understandable, without undue complexity or unnecessary discretionary provisions.’

NOU 2019: 5, p. 102.

The ongoing reform of the PAA stands out as an obvious venue for the facilitation of public sector digitalisation in Norway. The proposal for a new PAA takes a balanced approach to digitalisation, highlighting opportunities and risks. As regards risks, the proposal is particularly concerned with the privacy of citizens. Hence, it underscores the need to ensure that the processing of personal data is based on purpose limitation and proportionality. While the comprehensive PAA reform could take years to implement, certain piecemeal adaptations of sector-specific legislation have been enacted in recent years. In the following, we consider the main digitalisation efforts in Norwegian law, including the PAA proposal as well as some of the sector-specific changes that have been proposed, to give an overview of the extent to which the current/emerging legal framework supports digitalisation. As mentioned, our principal emphasis is on the facilitation of AI technologies.

3.1.2 Data Sharing and Data Reuse

Regulations pertaining to the use or reuse of data are often highlighted as barriers to digitalisation and, particularly, AI development, in Norway. For example, the Law Commission on the PAA notes the difficulty of implementing cohesive services without sharing data across agencies.

Digital Strategy for the Public Sector 2019–2025, p. 18.

National AI Strategy, p. 27; Consultation Memorandum of the Justis- og beredskapsdepartementet (Ministry of Justice and Public Security), September 2020, Ref. No. 20/4064.

Regulation 17 June 2022 No. 1045 (Forskrift om deling av taushetsbelagte opplysninger og behandling av personopplysninger m.m. i det tverretatlige samarbeidet mot arbeidslivskriminalitet (a-kriminformasjonsforskriften)).

Moreover, as regards data sharing, the National AI Strategy particularly notes how current regulations ‘provide no clear legal basis for using health data pertaining to one patient to provide healthcare to the next patient unless the patient gives consent.’

National AI Strategy, p. 23.

VF-Rapport nr. 7-2022, p. 47.

In sector-specific legislation, certain rules have been introduced in response to concerns about limitations on the access to data as barriers to digitalisation and AI development. Notably, a specific provision concerning the possibility of applying for permission to use health data for the purposes of developing and using clinical decision support systems was added to the Health Personnel Act in 2021. In the preparatory works, the Ministry of Health acknowledges that the permissibility of using health data for these purposes was ambiguous before this. The new provision is an example of how the use of special categories of personal data (as per Article 9 GDPR) can be regulated at the national level. It was relied on in the Ahus sandbox project mentioned in section 1.2.

A Good Heart for Ethical AI: Exit Report for Ahus Sandbox Project (EKG AI). Theme: Algorithmic Bias and Fair Algorithms. / Norwegian Data Protection Authority (Datatilsynet), February 2023 (https://www.datatilsynet.no/en/regulations-and-tools/sandbox-for-artificial-intelligence/reports/ahus-exit-report-a-good-heart-for-ethical-ai/objective-of-the-sandbox-project/).

3.1.3 Facilitation of Automated Processing and Decision-Making

The potential for automation of administrative case handling is highlighted in the 2019 PAA proposal. As mentioned in section 1, several examples of automated processing already exist in the Norwegian public sector.

See also NOU 2019: 5, p. 259.

NOU 2019: 5, p. 174.

As regards the need for a legal basis in national law for fully automated decision-making, pursuant to Article 22 GDPR, the 2019 PAA proposal suggests a general provision according to which the Government is given the authority to issue regulations governing the use of fully automated decision-making in specific types of cases. However, decisions that do not have important restrictive impacts on the rights and interests of an individual can rely on fully automated means, according to the proposal.

NOU 2019: 5, p. 263.

Regulating Automated Decision-Making: An Analysis of Control over Processing and Additional Safeguards in Article 22 of the GDPR. / Mariam Hawath. In: European Data Protection Law Review, Vol. 7, No. 2, 2021, p. 161–173.

One example of a provision facilitating fully automated decision-making is found in § 11 of the 2014 Norwegian Patient Journal Act. According to this provision, certain decisions can be based solely on automated processing of personal data, when the decision is of minor impact to the individual. In the preparatory works, which are important sources of legal interpretation in Norway, decisions concerning small monetary amounts are mentioned as an example of minor impact decisions.

Prop. 91 L (2021–2022) Endringer i pasientjournalloven mv. (hereinafter ‘Prop. 91 L (2021–2022)’), p. 43.

A similar example is found in provisions added in 2020 and 2021 to the 1949 Norwegian Act on the State Pension Fund (Statens pensjonskasseloven) (§ 45 b), the 2006 Act on the Norwegian Labour and Welfare Administration (NAV-loven), and the 2016 Norwegian Tax Administration Act. These provisions permit the State Pension Fund, NAV, and the Tax Administration to make decisions based on fully automated processing of personal data, provided that such decision-making is compatible with the right to data protection and is not based on criteria that require the exercise of decisional discretion. An exception from the latter restriction applies to decisions where the outcome is not questionable.
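The conditions described in the preceding paragraph can be read as a simple gating rule. The following is a minimal sketch of that reading only; the function name and its three boolean parameters are hypothetical simplifications introduced for illustration and do not reproduce the statutory wording or any agency's actual system.

```python
def may_fully_automate(
    data_protection_compatible: bool,
    requires_discretion: bool,
    outcome_not_questionable: bool,
) -> bool:
    """Simplified reading of the conditions summarised in the text (hypothetical).

    Fully automated decision-making is treated as permitted only if it is
    compatible with the right to data protection AND either no discretionary
    criteria are involved or the outcome is not questionable.
    """
    if not data_protection_compatible:
        return False
    return (not requires_discretion) or outcome_not_questionable


# Illustrative cases (assumed inputs, not real case types):
rule_based_case = may_fully_automate(True, False, False)
discretionary_but_clear = may_fully_automate(True, True, True)
discretionary_and_open = may_fully_automate(True, True, False)
```

In practice, each of the three inputs is itself the result of a legal assessment, so the sketch shows only how the conditions combine, not how they are established.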

Act 28 July 1949 No. 26 on the State Pension Fund, § 45 b, second indent; Act 16 June 2006 No. 20 on the Labour and Welfare Administration, § 4 a, second indent.

Prop. 135 L (2019–2020) Endringer i arbeids- og velferdsforvaltningsloven, sosialtjenesteloven, lov om Statens pensjonskasse og enkelte andre lover (hereinafter ‘Prop. 135 L (2019–2020)’), p. 20.

Prop. 135 L (2019–2020), p. 58 and 60.

In addition to providing a limited basis for automated decision-making, the Norwegian Tax Administration Act explicitly facilitates profiling by the tax administration based on personal data when profiling is deemed necessary for the purpose of imposing targeted measures promoting compliance with the tax legislation. We return to this example in section 3.2 in connection with the discussion of to what extent the rights and values governing the digitalisation of the Norwegian public sector are protected within the emerging legal framework.

3.1.4 Pilot Schemes and Sandboxes

In addition to specific initiatives, there are overarching systems in place designed to accelerate the digitalisation of the public sector.

Central to AI adoption are the pilot programs for public administration and the government's emphasis on sandboxes. Norway has a unique law, the Act on Pilot Schemes by Public Administration of 1993 (Lov om forsøk i offentlig forvaltning (forsøksloven)), which is designed to foster experimentation within the public sector. This law aims to cultivate efficient organizational and operational capabilities in public administration via trials or experiments and seeks to optimize task distribution among various administrative bodies and levels. A significant focus lies in enhancing public service delivery, ensuring optimal resource use, and fostering robust democratic governance (Article 1).

Under this legislation, particularly Article 3, public agencies can apply to the Ministry of Local Government and Modernisation for permission to deviate from prevailing laws and regulations. This provision gives them the flexibility to experiment with novel organizational methods or ways of performing tasks for up to four years. Such trial periods can be extended by up to two years, and if there are impending reforms aligned with the trial's objectives, the duration can be extended until the reforms become operational. In 2021, Oxford Research conducted the first review of the Pilot Scheme Act since its enactment in 1993 and concluded that the Act is little known and rarely used.

Evaluering og utredning av forsøksloven. / Oxford Research. 2021.

Evaluering og utredning av forsøksloven. / Oxford Research. 2021, p. 1.

The National AI Strategy highlights that the government plans to release a white paper assessing if the Pilot Scheme Act offers ample leeway to trial innovative AI-based solutions.

National AI Strategy, p. 24.

Evaluering og utredning av forsøksloven. / Oxford Research. 2021, p. 40.

Therefore, as the Pilot Scheme Act stands today, experiments with AI would not be feasible, in part because of the exception related to confidentiality and citizens' rights and obligations.

Evaluering og utredning av forsøksloven. / Oxford Research. 2021, p. 52.

Evaluering og utredning av forsøksloven. / Oxford Research. 2021, p. 40.

Evaluering og utredning av forsøksloven. / Oxford Research. 2021, p. 53.

Moreover, the Norwegian government has been a strong proponent of using regulatory ‘sandboxes’ to foster innovation across diverse sectors. In 2019, the Norwegian Financial Supervisory Authority (Finanstilsynet) created a sandbox specifically for financial technology (fintech). This initiative aimed to deepen the Financial Authority’s grasp of emerging technological solutions in the financial sector and simultaneously enhance businesses' understanding of regulatory requirements for new products, services, and business models.

National AI Strategy, p. 24.

In 2022, the sandbox strategy was broadened to cover privacy and AI with the creation of the ‘Sandbox for Responsible AI’. Overseen by the Norwegian Data Protection Authority, this endeavour aims to boost AI innovation within Norway.

Regulatory Privacy Sandbox. Datatilsynet https://www.datatilsynet.no/en/regulations-and-tools/sandbox-for-artificial-intelligence/

3.2 Adequacy of Current (or Emerging) Framework in Strengthening Values and Rights

As described in the previous section, the legal framework governing the Norwegian public sector does not entail a holistic approach to digitalisation or AI technologies. Consequently, there are few laws that specifically address the potential negative impacts of digitalisation on the fundamental rights and values upon which the Norwegian constitutional democracy is founded. This has led to criticism from stakeholders suggesting that government initiatives are not backed by adequate safeguards to protect fundamental rights, democracy, and the rule of law. In the following, we discuss the current and emerging legal framework’s ability to enhance the values and rights that were highlighted in section 2.2, which ought to govern the digitalisation of the Norwegian public sector.

3.2.1 Privacy and Data Protection

Although the government's strategies for digitalisation of the public sector and AI emphasize the importance of user privacy, the Commission for Data Protection (Personvernkommisjonen) has highlighted shortcomings in effectively addressing data protection issues.

NOU 2022: 11, p. 61.

First, there is an absence of a unified approach to privacy across public administration. As it stands, no single public agency bears overarching responsibility for assessing the aggregate use of personal data in public services. Current evaluations tend to be conducted within the confines of individual sectors or as part of specific legislative or regulatory efforts. This fragmented approach results in a glaring absence of a holistic overview concerning the collection, use, and further processing of personal data within public administration.

NOU 2022: 11, p. 71–72.

NOU 2022: 11, p. 73.

Second, and closely related to the first point, there exists a noticeable gap in establishing a comprehensive framework for assessing the impact of legislative changes on user privacy.

NOU 2022: 11, p. 73–74.

NOU 2022: 11, p. 75.

NOU 2022: 11, p. 72.

Third, the Commission draws attention to the widespread use of broad legal bases for the processing of personal data by public agencies.

NOU 2022: 11, p. 73–74.

NOU 2022: 11, p. 81.

Another area of concern relates to the legal provisions allowing public agencies to implement automated decisions, as specified in GDPR Article 22(2)(b). This article provides exceptions for the use of automated decisions if permitted by member states' laws. The report notes that as of Spring 2022, there have been more than 16 laws and ministerial orders in Norway that permit such automated decisions by public agencies.

NOU 2022: 11, p. 189.

NOU 2022: 11, p. 189–90.

Fourth, there is a notable deficiency in essential routines and expertise for assessing the impact of digitalisation on data security.

NOU 2022: 11, p. 61.

NOU 2022: 11, p. 77.

While these challenges specifically pertain to data protection issues, they also underscore the broader absence of an adequate framework to strengthen the democratic process and rule of law. Notably, the lack of Parliamentary oversight for many of these changes, as well as the absence of impact assessments for fundamental rights, are of particular concern and have implications that extend to other areas. Other scholars share these concerns identified by the Commission. For example, Broomfield and Lintvedt criticise some of the changes introduced in 2021 to the Tax Administration Act, which granted the Tax Administration Office a legal basis to process personal data for activities like compilation, profiling, and automated decision-making.

Snubler Norge inn i en algoritmisk velferdsdystopi? / Broomfield, Heather and Lintvedt, Mona Naomi in Tidsskrift for velferdsforskning, 25/3 2022.

Snubler Norge inn i en algoritmisk velferdsdystopi? / Broomfield, Heather and Lintvedt, Mona Naomi in Tidsskrift for velferdsforskning, 25/3 2022, p. 8.

Snubler Norge inn i en algoritmisk velferdsdystopi? / Broomfield, Heather and Lintvedt, Mona Naomi in Tidsskrift for velferdsforskning, 25/3 2022.

In contrast, as described in the previous section, the provisions facilitating fully automated decision-making in the Labour and Welfare Administration do not address the use of AI for profiling or other processes if this requires discretionary assessment, such as when determining benefits. This exclusion of automated processing from decisions based on discretionary criteria is partially motivated by the protection against discrimination, as recognized under § 98 of the Constitution and Article 14 of the ECHR, as well as individuals' data privacy rights, particularly their right not to be subject to solely automated decisions that have a significant impact. However, it is becoming increasingly evident that the use of outputs from automated processing of personal data, such as categorizing people into risk groups based on profiling, can have a significant impact on individuals, even though the decision is ultimately made by a human being. In her study, Lintvedt points out that process-leading decisions, such as selections for inspection, can be so intrusive that they could have a similar impact on the individual as a decision.

Kravet til klar lovhjemmel for forvaltningens innhenting av kontrollopplysninger og bruk av profilering. / Lintvedt, Mona Naomi. Utredning for Personvernkommisjonen. 2022.

Certain courts have begun evaluating the implications of risk assessment systems. A notable example occurred in February 2020, when the District Court of The Hague handed down a landmark decision concerning the controversial System Risk Indication (SyRI) algorithm deployed by the Dutch government.

Automated Decision-Making Under the GDPR: Practical Cases from Courts and Data Protection Authorities. / Vale, Sebastião Barros and Fortuna, Gabriela Zanfir. Future of Privacy Forum. 2022.

The Court concluded that even though the use of SyRI does not inherently aim for legal effect, a risk report significantly impacts the private life of the individual it pertains to. This determination, coupled with other findings like the system's lack of transparency, led the Court to rule that the scheme violated Article 8 of the ECHR, which safeguards the right to respect for private and family life. However, the Court refrained from definitively answering whether the precise definition of automated individual decision-making in the GDPR was met, or whether one or more of the GDPR's exceptions to its prohibition applied in this context.

A German Court has referred this issue to the CJEU for resolution. The case pertains to the business model of SCHUFA, a German credit reference agency. SCHUFA provides its clients, including banks, with information about consumers' creditworthiness using ‘score values.’

Case C-634/21 Request for a preliminary ruling from the Verwaltungsgericht Wiesbaden (Germany) lodged on 15 October 2021 – OQ v Land Hesse

The CJEU has confirmed that generating a credit score will be covered by Article 22(1) if a third party (e.g., a bank) ‘draws strongly’ on that score to decide whether or not to grant a loan. See Case C‑634/21 REQUEST for a preliminary ruling under Article 267 TFEU from the Verwaltungsgericht Wiesbaden (Administrative Court, Wiesbaden, Germany) ECLI:EU:C:2023:95, para 73.

3.2.2 Environmental Well-Being

In a digitalisation context, the implication of Article 112 of the Norwegian Constitution is that decisions concerning digitalisation measures must take the environmental impact of the measure into account. This might involve assessing the energy consumption of digital technologies. In theory, environmental impact assessments could be decisive when choosing between different solutions to implement. Such considerations could also influence the direction of future research and development initiatives supported by the Norwegian state. For instance, due to the substantial energy consumption involved in training machine learning algorithms using large datasets, the Norwegian public sector might be inclined to support initiatives that either rely on or develop innovative approaches to machine learning using smaller datasets. Currently, the ability of machine learning from smaller datasets to achieve the necessary predictive accuracy for most tasks in the public sector is limited. However, if the potential for machine learning from small data improves in the future, perhaps approximating but not quite achieving the same level of accuracy as AI systems based on big data, a trade-off might emerge. This trade-off could involve choosing between technology that offers the highest level of accuracy or opting for technology that performs slightly less accurately but has a lower environmental impact.

3.2.3 Transparency and Explainability

The Norwegian legal framework has various provisions mandating transparency and explainability of public-sector decision-making. The PAA § 25 requires that individual decisions be justified. The justification should refer to the relevant rules and factual circumstances. As regards criteria that involve the exercise of discretion, the justification must describe the main considerations determining the outcome of the discretionary assessment. Additionally, if the use of AI involves personal data, there are further requirements for transparency and for providing information to those whose data is being used (GDPR Articles 5(1)(a), 12–14).

It is widely recognized that the use of ‘black-box’ AI systems to support or automate administrative decision-making might have a negative impact on the values and rights pertaining to transparency and explainability in the public sector. While the legal framework in Norway does not specifically address these impacts of AI systems, the general requirement that individual decisions need to be properly explained with reference to the content of discretionary considerations entails a boundary for the use of black-box AI systems in this context. Even if such AI systems are used only as decision support, this might contradict an individual’s right to an explanation of the decisive considerations. Consequently, further research is needed to develop explainable AI particularly as regards discretionary criteria that may be involved in public-sector decision-making.

3.2.4 Non-Discrimination, Equality, and Digital Inclusion

The central non-discrimination law in Norway is the 2017 Equality and Non-Discrimination Act. Applicable to all sectors, the Act establishes in § 6 a prohibition against discrimination based on ‘gender, pregnancy, leave for birth or adoption, caregiving responsibilities, ethnicity, religion, worldview, disability, sexual orientation, gender identity, gender expression, age, or combinations of these grounds.’

Concern about the impact of digitalisation on equality and non-discrimination is particularly salient in relation to AI technologies. In the international discourse on the use of AI systems in the public sector, the risk of discrimination due to biases in AI systems is a prominent concern, often referred to as ‘algorithmic discrimination’.

E.g., Teaching Fairness to Artificial Intelligence: Existing and Novel Strategies Against Algorithmic Discrimination Under EU Law. / Hacker, Philipp. In: Common Market Law Review, Vol. 55, No. 4, 2018; Tuning EU Equality Law to Algorithmic Discrimination: Three Pathways to Resilience. / Xenidis, Raphaële. In: Maastricht Journal of European and Comparative Law, Vol. 27, No. 6, 2020, p. 736–758.

There are no specific provisions addressing algorithmic discrimination in current Norwegian law, but Norwegian non-discrimination law is technology-neutral and applicable to decision-making where AI is involved. As regards important concerns related to algorithmic discrimination, there are certain strengths and weaknesses of Norwegian non-discrimination law which are worth highlighting.

One strength is the Equality and Non-Discrimination Act’s clear prohibition of intersectional discrimination. Intersectional discrimination occurs if a person is discriminated against because of a combination of protected characteristics, for example, if a provision or practice is specifically detrimental to persons of a particular ethnic background who also have a particular sexual orientation.

Prop. 81 L (2016–2017) Lov om likestilling og forbud mot diskriminering (hereinafter ‘Prop. 81 L (2016–2017)’), p. 113.

Gender shades: Intersectional accuracy disparities in commercial gender classification. / Buolamwini, Joy and Gebru, Timnit. In: Proceedings of the 1st Conference on Fairness, Accountability and Transparency, PMLR 81, 2018, p. 77–91.

Another clear strength of the Equality and Non-Discrimination Act when it comes to potential algorithmic discrimination in the public sector is the emphasis on proactive measures to prevent discrimination. According to § 24 of the Act, public authorities are obligated to make ‘active, targeted and systematic efforts to promote equality and prevent discrimination’. This implies that public authorities in Norway are legally obligated to address the issue of algorithmic discrimination before implementing AI technologies. Furthermore, § 24 specifies that the measures shall be aimed at counteracting stereotyping, which is a widespread concern associated with AI technologies.

In addition to the general provisions pertaining to non-discrimination, the Equality and Non-Discrimination Act stipulates requirements for universal design of ICT systems. Universal design is an important way of providing reasonable accommodation in the access to public services by persons with disabilities. It entails, for example, enlarging text, reading text aloud, captioning audio files and videos, providing good screen contrasts, and creating a clear and logical structure.

Prop. 81 L (2016–2017), p. 325.

Digital Strategy for the Public Sector 2019–2025, p. 18.

However, there are also issues related to AI bias that Norwegian non-discrimination law is less prepared to tackle. For instance, academic literature on AI bias discusses the possibility that algorithms might discriminate against other groups than those protected by non-discrimination laws, despite being worthy of protection.

E.g., The theory of artificial immutability: Protecting algorithmic groups under anti-discrimination law. / Wachter, Sandra. In: Tulane Law Review, Vol. 97, No. 2, 2022, p. 149–204, p. 149.

Protected Grounds and the System of Non-Discrimination Law in the Context of Algorithmic Decision-Making and Artificial Intelligence. / Gerards, Janneke and Zuiderveen Borgesius, Frederik. In: Colorado Technology Law Journal, Vol. 20, No. 1, 2022, p. 1–56.

Prop. 81 L (2016–2017), p. 97.

Another potential weakness is arguably the wide possibility of justification of potentially discriminatory behaviour under Norwegian non-discrimination law. Justification is generally possible regardless of whether a decision-making process constitutes potential direct or indirect discrimination. In comparison, the EU Equality Directives permit justification of potential direct discrimination only in exceptional circumstances that are specifically described in the relevant directives.

3.2.5 Safety and Security

Various laws, including Articles 5(1)(f) and 32 of the GDPR, impose security requirements when software and/or AI systems process personal data. In addition, a core principle in the National AI Strategy is that ‘cyber security should be built into the development, operation and administration of systems that use AI’. The National Strategy for Digitalisation of the Public Sector also ‘requires that cyber security be integrated into the service development, operation and management of common IT solutions, in accordance with the objectives of the National Cyber Security Strategy for Norway’.

Digital Strategy for the Public Sector 2019–2025, p. 8.

Despite this, in recent years, several incidents have highlighted vulnerabilities in the cyber and data security of public agencies in Norway. Prominent examples are the cyberattacks on the Norwegian Parliament (Stortinget). In September 2020, the Parliament faced a significant cyberattack in which the email accounts of several MPs and staff members were compromised and varying amounts of data were extracted.

Cyberattack on the Storting. / Storting. 03 Sep 2020. https://www.stortinget.no/nn/In-English/About-the-Storting/News-archive/Front-page-news/2019-2020/cyberattack-on-the-storting/

New cyberattack on the Storting. / Storting. 11 March 2021 https://www.stortinget.no/nn/In-English/About-the-Storting/News-archive/Front-page-news/2020-2021/new-cyberattack-on-the-storting/

Local administrative bodies also experienced security breaches. Notably, the Norwegian Data Protection Authority imposed a fine on the Municipality of Østre Toten due to insufficient information security.

Municipality of Østre Toten fined. / Datatilsynet. 7 June 2022 https://www.datatilsynet.no/en/news/aktuelle-nyheter-2022/municipality-of-ostre-toten-fined/

The report from the Commission for Data Protection underscores that these failures are affecting the trust in public administration.

NOU 2022: 11, p. 61.

3.3 Emerging Trends and Challenges

Based on the abovementioned examples of legislative efforts to facilitate digitalisation in the Norwegian public sector, certain trends can be identified. One salient trend is the focus on creation of specific provisions providing a legal basis for certain data processing operations. This tendency can be traced back to the fact that there is high awareness of the potential impact of digitalisation on privacy and data protection in the National Digitalisation Strategy and in the legislative work that has been done so far. Particularly, the legislature has been mindful of the need for a legal basis for data sharing/re-use and automated decision-making.

However, Norway does not currently have a holistic approach to the regulation of digitalisation in general or of AI technologies specifically. The laws facilitating digitalisation that we have mentioned are piecemeal. If one compares the legislative amendments that have been implemented to the principles and values mentioned in section 2.2, which ought to guide digitalisation efforts in Norway, it appears that the parts of the legal framework that have been adjusted to accommodate digitalisation focus more narrowly on data protection-related issues.

The legislative trends we have observed have important limitations when it comes to the question of to what extent they facilitate digitalisation. The legislation pertaining to the Labour and Welfare Administration and Tax Administration has been amended with provisions concerning fully automated decision-making, but these amendments currently only foresee hard-coded software systems. These systems tend to be highly predictable and explainable and, thus, they do not invoke the same concerns in relation to the rights and values mentioned in section 2.2 as more advanced AI systems do. Arguably, the use of AI as decision support raises more profound concerns than full automation based on hard-coded software programs. Yet, regulatory provisions pertaining to AI systems intended for decision support are largely absent in the current and emerging legal framework in Norway.

From the perspective of the Norwegian legislature, the existence of legal provisions in public administration law that contain discretionary criteria has been highlighted as a challenge to the automation of public administration. It has been argued that regulations suitable for automated administrative proceedings ought to be machine-readable so that they can be applied by AI systems.

National AI Strategy, p. 21.

National AI Strategy, p. 21–22.

Another trend discernible across numerous policy documents from Norwegian authorities appears to be the inclination towards viewing digitalisation and technology as instrumental in ensuring citizens' rights. The government's AI strategy emphasizes the role of automation as an important element in its endeavour to uphold and promote citizens' constitutional and fundamental rights.

“Automation can also promote equal treatment, given that everyone who is in the same situation, according to the system criteria, is automatically treated equally. Automation enables consistent implementation of regulations and can prevent unequal practice. Automated administrative proceedings can also enhance implementation of rights and obligations; for example, by automatically making decisions that grant benefits when the conditions are met. This can particularly benefit the most disadvantaged in society. More consistent implementation of obligations can lead to higher levels of compliance and to a perception among citizens that most people contribute their share, which in turn can help build trust.”

National AI Strategy, p. 26.

Some of the planned projects are also in line with this perspective. For example, one of the planned digitalisation projects, namely ‘Digitalising the right to access’, aims to create a platform that gives citizens an overview of, insight into and increased control over their own personal data. There is a similar tendency to view AI deployment as a way to address stereotypes and errors in human judgement, thereby aiming to ensure equal treatment.

Snubler Norge inn i en algoritmisk velferdsdystopi? / Broomfield, Heather and Lintvedt, Mona Naomi in Tidsskrift for velferdsforskning, 25/3 2022, p. 5–6.

Some scholars point out that the government’s policy overwhelmingly favours AI, with few reservations.

Snubler Norge inn i en algoritmisk velferdsdystopi? / Broomfield, Heather and Lintvedt, Mona Naomi in Tidsskrift for velferdsforskning, 25/3 2022, p. 6.

In the Dutch welfare case, the so-called ‘System Risk Indication’ (SyRI) was developed as a government tool to alert the public administration to citizens deemed to pose a fraud risk.

High-Risk Citizens. / Braun, Ilja. Algorithm Watch, 4 July 2018. https://algorithmwatch.org/en/high-risk-citizens/ ; Why the resignation of the Dutch government is a good reminder of how important it is to monitor and regulate algorithms. / Elyounes, Doaa Abu. Berkman Klein Center Medium Collection, 10 February 2021. https://medium.com/berkman-klein-center/why-the-resignation-of-the-dutch-government-is-a-good-reminder-of-how-important-it-is-to-monitor-2c599c1e0100

Related to the aforementioned trend is the emphasis on rule-based AI systems as a means to alleviate threats to human rights, especially regarding transparency and discrimination concerns. For instance, the national strategy for AI notes that a characteristic shared by ‘all current automated case management systems is that they are rule-based.’

National AI Strategy, p. 26.

National AI Strategy, p. 26.

However, it is worth noting that rule-based systems might exhibit discrimination arising from biases embedded within the rules themselves. For example, if driving between 3 and 5 AM is associated with a higher risk of drunk driving and consequently linked to higher insurance premiums, such rules could unintentionally discriminate against individuals working lower-wage jobs, like janitors, who may be driving early in the morning due to their work schedules. Likewise, an overly specific rule-based system might perform poorly when introduced to new data, resulting in potential discrimination. Hence, while rule-based AI systems offer benefits in terms of transparency and explainability, they also necessitate careful consideration of potential discrimination risks. Again, the Dutch welfare scandal is an example of how human bias can infiltrate AI systems. The fraud detection system was deliberately deployed only in poorer neighbourhoods. This in turn reinforced the algorithm's tendency to classify people with immigrant backgrounds as high risk. A Dutch court determined that merely deploying the system to target poor neighbourhoods constitutes discrimination based on socioeconomic or immigrant status.

Welfare surveillance system violates human rights, Dutch court rules. / Henley, Jon and Booth, Robert. IN: The Guardian, 5 February 2020. https://www.theguardian.com/technology/2020/feb/05/welfare-surveillance-system-violates-human-rights-dutch-court-rules

4. Impact of Proposed EU AI Act

This section assesses how the proposed EU regulation on artificial intelligence (the AI Act) will supplement national administrative law and to what extent it will sufficiently alleviate the challenges we have identified. Specifically, it explores the impact of the AI Act from two perspectives: first, how the Act addresses the challenges concerning human rights protection, and second, how it aids in overcoming the barriers to AI adoption by public agencies.

4.1 The Impact of the Proposed AI Act in Strengthening Human Rights Protection

Section 3.1 evaluates the current national legal framework concerning AI adoption by public agencies and the protection of citizens from AI-related harms. Challenges remain in effectively safeguarding citizens' rights in the specific context of digitalisation. This has been highlighted by the Commission for Data Protection, especially in terms of data protection and privacy. However, this overarching weakness in the national framework extends to other areas as well. In this regard, the discussion in section 3.2 has shown the limitations of existing laws in addressing new discrimination harms associated with AI systems.

The AI Act could be pivotal in addressing many of these concerns. The proposed AI Act is geared towards promoting human-centric AI, ensuring its development respects human dignity, upholds fundamental rights, and ensures the security and trustworthiness of AI systems.

Proposal for a Regulation of the European Parliament and of the Council Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts COM(2021) 206 Final (hereinafter Proposed AI Act)

The proposed AI Act adopts a risk-based approach, categorizing AI systems into four risk levels: (1) ‘unacceptable risks’ (that lead to prohibited practices), (2) ‘high risks’ (which trigger a set of stringent obligations, including conducting a conformity assessment), (3) ‘limited risks’ (with associated transparency obligations), and (4) ‘minimal risks’ (where stakeholders are encouraged to follow codes of conduct).

Explanatory Memorandum to the Commission’s AI Act Proposal, p. 12.

Most of the prohibited practices concerning AI usage are directed at public agencies. These include the use of real-time biometric identification and social scoring. Similarly, most of the stand-alone high-risk AI applications concern public agencies' use of AI in the following areas: access to and enjoyment of essential services and benefits; law enforcement; migration, asylum, and border management; and administration of justice and democratic processes. Clearly, the public administration sector is under scrutiny, and many of these provisions aim to enhance the protection of individuals from harms within this domain.

Examining the prohibited practices, the AI Act addresses two primary categories of AI systems used by public agencies. First is the use of real-time biometric identification by public agencies for law enforcement purposes. While biometric identification includes fingerprints, DNA, and facial features, the prohibition notably emphasizes facial recognition technology. A system that would fall under this prohibition might be an expansive CCTV network on public streets integrated with facial recognition software. The deployment of such systems has significant ramifications for individual rights, including data protection, privacy, freedom of expression, and protection against discrimination. Facial recognition technology can process and analyse multiple data streams in real time, enabling large-scale surveillance of individuals and thereby compromising their rights to privacy and data protection. The pervasive nature of this surveillance can also affect other fundamental rights, such as freedom of expression and non-discrimination. The omnipresence of surveillance tools may inhibit individuals from voicing their opinions freely, since people tend to self-censor and alter their behaviour when they feel overly surveilled. Moreover, in most cases, the negative impact of AI-driven surveillance is felt most acutely by marginalized groups in the population. Thus, strengthening existing safeguards against potential harms from facial recognition technology is vital.

Another prohibited practice pertinent to public administration is social scoring. The AI Act prohibits public authorities from employing AI systems to generate 'trustworthiness' scores, which could potentially lead to unjust or disproportionate treatment of individuals or groups. This prohibition seems inspired by the Chinese Social Credit System, where the government assigns scores to citizens and businesses based on various factors, including financial creditworthiness, compliance with laws and regulations, and social behaviours.

China's 'social credit' system ranks citizens and punishes them with throttled internet speeds and flight bans if the Communist Party deems them untrustworthy. / Canales, Katie and Mok, Aaron. IN: Business Insider, 28 Nov 2022.

Indeed, Norwegian law already places certain restrictions on AI use by public agencies, even before the introduction of the AI Act. There are existing laws that prevent public agencies from making specific decisions using AI. A prime example is the limited scope of the NAV Act, Article 4 a. While this provision is meant to facilitate automated decision-making, it does not facilitate the use of AI technologies. It prevents NAV from using fully automated decision-making except in cases where the applicable criteria involve no discretion and the outcome of the decision is obvious. This is grounded in the belief that methods capable of automating decisions relying on more discretionary criteria (i.e., in practice, advanced AI systems) present ‘a greater risk of unjust and unintended discrimination.’

Prop. 135 L (2019–2020), Chapter 5.3.1.

In contrast, while the AI Act categorizes AI systems intended for these purposes as high-risk systems, it permits the placement of such systems on the market. Hence, a certain tension arises between the legal framework in Norway and the AI Act’s ambition for harmonization. While Norwegian law does not permit certain uses of AI in the public sector due to concerns about the risks of discrimination (among other concerns), the AI Act assumes that these risks are sufficiently addressed if the requirements pertaining to high-risk AI systems are complied with. There may be good reasons for limiting the use of AI systems through national legislation, but it is worth questioning whether such limitations remain justified when they rely on risks that are addressed by the AI Act. Going forward, we would advise Norwegian legislators to consider this aspect of the relationship between the AI Act and national legislation.

Many AI systems pertinent to the public administration sector fall under the AI Act’s high-risk category. This includes, for example, public agencies' use of AI in distributing benefits, making decisions in immigration and border control, law enforcement, and infrastructure management. The requirements for conducting risk assessments, ensuring human oversight, maintaining data quality, and adhering to cybersecurity standards will bolster protection against potential harms. These obligations are especially significant for a country like Norway, which has a vast public administration sector and a comprehensive social safety net, and where AI could play a pivotal role in the government's initiatives to modernize and optimize the welfare system. The discussions in section 1, detailing implemented and planned projects, highlight the use of AI to automate decisions on citizenship applications, NAV's ongoing project to use AI to predict the duration of sick leave, and Lånekassen’s use of AI in student loan applications. Similarly, many of the ongoing AI projects in the health sector would also qualify as high-risk AI systems. For such projects, the above-mentioned requirements for high-risk AI systems are crucial in strengthening the protection of human rights. For instance, requirements to assess the relevance and representativeness of data can mitigate potential biases embedded in datasets. Requirements on human oversight and involvement can help public agencies detect and rectify potential biases. While reflecting overarching rights and values that are protected by general provisions in Norwegian law, these legal requirements address AI technologies and associated risks at a level of specificity that is currently not found in the Norwegian framework.

The Dutch welfare scandal serves as a stark example of public agencies deploying AI systems without essential safeguards. The system was notoriously opaque. When the non-profit organization 'Bij Voorbaat Verdacht' requested insight into the software's evaluation criteria for welfare abuse, the government countered that disclosing such information might aid potential wrongdoers. The absence of human oversight was glaringly evident, as even minor omissions in filling in a form led to high-risk classifications. The provisions of the AI Act on risk assessment, transparency, and human oversight could likely have averted or lessened the repercussions of this scandal.

In Norway, a report by the Data Protection Authority highlighted that the Norwegian Tax Authority has developed a predictive tool to aid in selecting tax returns to be checked for potential discrepancies or tax evasion.

Artificial intelligence and privacy. / Datatilsynet. 2018, p. 12

Artificial intelligence and privacy. / Datatilsynet. 2018, p. 12

The obligations for high-risk AI systems introduced by the AI Act also complement and address some of the gaps present in the GDPR. One significant area where the AI Act provides additional clarity is concerning decisions that, while not entirely automated, could have substantial impacts, such as credit scoring. As highlighted earlier, the study commissioned by the Commission for Data Protection underscores that process-driven decisions, like selections for inspections, can be so intrusive that they might equate to a ‘decision’ in their impact on an individual.

Kravet til klar lovhjemmel for forvaltningens innhenting av kontrollopplysninger og bruk av profilering. / Lintvedt, Mona Naomi. Utredning for Personvernkommisjonen. 2022.

Proposed AI Act, Annex III (5(a)).

Despite this, many civil society organizations, including Amnesty International and Human Rights Watch (HRW), have criticized the Act's human rights safeguards as inadequate, especially considering governments' increasing use of AI to deny or limit access to lifesaving benefits and other social services. Such use exacerbates existing concerns over inequality and the digital divide. For instance, HRW conducted a detailed study on the AI Act’s impact on the distribution of social security and highlighted the following:

'While the EU regulation broadly acknowledges these risks, it does not meaningfully protect people’s rights to social security and an adequate standard of living. In particular, its narrow safeguards neglect how existing inequities and failures to adequately protect rights – such as the digital divide, social security cuts, and discrimination in the labour market – shape the design of automated systems and become embedded by them.'

Q&A: How the EU’s Flawed Artificial Intelligence Regulation Endangers the Social Safety Net. / Human Rights Watch. 2021, p. 3.